[ICRA 2024] Robust Collaborative Perception without External Localization and Clock Devices

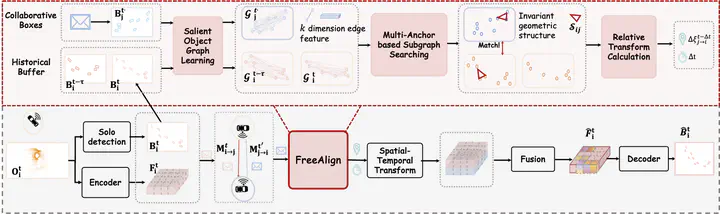

Overview of FreeAlign

Abstract

Consistent spatial-temporal coordination across multiple agents is fundamental for collaborative perception, which seeks to improve perception abilities through information exchange among agents. To achieve this spatial-temporal alignment, traditional methods depend on external devices to provide localization and clock signals. However, hardware-generated signals can be vulnerable to noise and potentially malicious attacks, jeopardizing the precision of spatial-temporal alignment. Rather than relying on external hardware, this work proposes a novel approach: aligning agents by recognizing the inherent geometric patterns within their perceptual data. Following this spirit, we propose a robust collaborative perception system that operates independently of external localization and clock devices. The key module of our system, FreeAlign, constructs a salient object graph for each agent based on its detected boxes and uses a graph neural network to identify common subgraphs between agents, leading to accurate relative pose and time. We validate FreeAlign on both real-world and simulated datasets. The results show that the FreeAlign-empowered robust collaborative perception system performs comparably to systems relying on precise localization and clock devices. We will release code related to this work.
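
To make the last step of that pipeline concrete, the sketch below shows how a relative pose could be recovered once two agents agree on which detected boxes correspond to the same objects. It is not the FreeAlign implementation: the graph neural network matching is skipped entirely, the correspondences are assumed to be given, and the function name `estimate_relative_pose_2d` is hypothetical. The alignment itself is a standard least-squares rigid fit (Kabsch/Procrustes) on 2D box centers.

```python
import numpy as np


def estimate_relative_pose_2d(src_centers, dst_centers):
    """Least-squares rigid fit so that dst ~= R @ src + t.

    Assumption: row i of src_centers and row i of dst_centers are the
    centers of the same physical object, seen in the two agents' local
    frames. In the paper this matching comes from the learned subgraph
    search; here it is simply taken as input.
    """
    src = np.asarray(src_centers, dtype=float)
    dst = np.asarray(dst_centers, dtype=float)

    # Remove the centroids so only the rotation remains to be solved.
    src_mean = src.mean(axis=0)
    dst_mean = dst.mean(axis=0)
    cross_cov = (src - src_mean).T @ (dst - dst_mean)

    # Kabsch: the SVD of the cross-covariance gives the optimal rotation.
    u, _, vt = np.linalg.svd(cross_cov)
    # Flip the last axis if needed so the result is a rotation, not a reflection.
    d = np.sign(np.linalg.det(vt.T @ u.T))
    rotation = vt.T @ np.diag([1.0, d]) @ u.T

    translation = dst_mean - rotation @ src_mean
    return rotation, translation
```

Calling `estimate_relative_pose_2d(boxes_a, boxes_b)` with two `(N, 2)` arrays of matched centers returns a 2x2 rotation matrix and a translation vector mapping agent A's frame into agent B's. At least two non-coincident matched objects are needed for the rotation to be determined, and noisy or wrong matches would call for a robust wrapper, which this sketch does not include.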